New website will fight your corner.

Report Harmful Content, the UK’s national reporting centre for harmful online content, based in Exeter, has described an “alarming” spike in reports of online fraud intended to cause reputational damage and emotional distress.

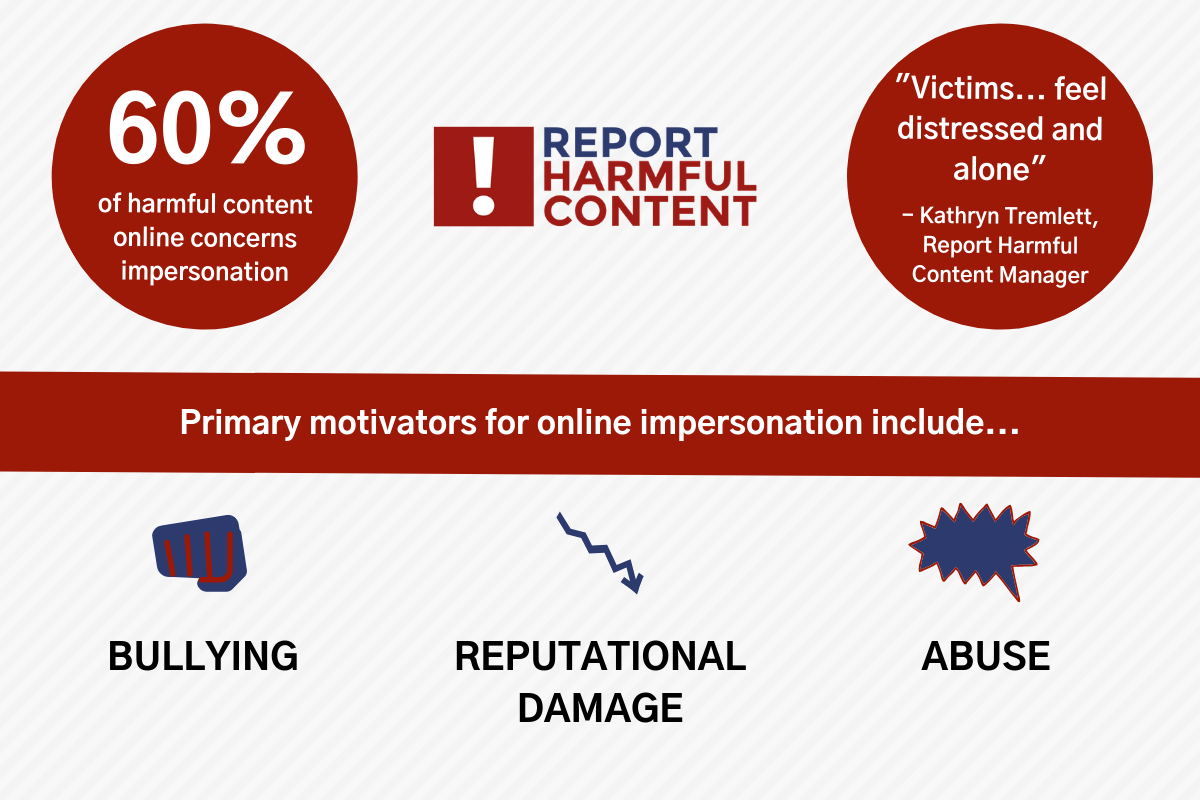

As part of a wider campaign to raise awareness of this growing issue, Report Harmful Content has publicised data revealing that 60% of reports it has received since April 2019 have concerned impersonation. The following stories show the complexity surrounding this latest issue in online fraud.

Names have been changed to protect clients’ privacy.

James contacted Report Harmful Content in April 2019 with a distressing and difficult experience of impersonation:

“I had a big fall out with a friend of mine earlier in the year. She was really angry with me and wanted to hurt me. She set up a fake social media profile pretending to be me and messaged all my friends telling them that I had an STI. She also added my boss as a friend and posted updates saying I was hungover at work when I wasn’t. I got a caution and could have lost my job. My friend wanted to ruin my life!”

Online impersonation is a common means of continuing abusive behaviour within the context of romantic relationships. Leah contacted the centre to report abusive and distressing online impersonation from an ex-partner:

“I had a restraining order on my ex. I was beginning to get my life back in order when he created a fake profile on social media and started harassing me through it. I felt like I couldn’t escape him and that I’d never be rid of him.”

Kathryn Tremlett, Manager of Report Harmful Content, commented on the rise in online impersonation, stating:

“One of the main reasons we set up Report Harmful Content was to help people who were going through this. Often, these complex and personal impersonation attacks require a human eye to look over them and pick up the broader context which an automated reporting process may miss.

“At Report Harmful Content, we have specialist practitioners who advise on how to report impersonation attacks to social media, and can also intervene and mediate with industry contacts when user reports have been unsuccessful.

“Most importantly, we listen and we understand! Victims of online impersonation often feel distressed and alone. We empathise with our clients and help them reach a resolution which feels empowering to them.”

Social media platforms such as Facebook and Instagram have strict community guidelines that ban impersonation accounts, and they allow users to report impersonation directly through the platform. The issue, according to Tremlett, is that reports are “not always assessed by a human which makes it very difficult for impersonation accounts to be detected, particularly if they have been set up by someone known to the victim, as these accounts appear very real.”

For help and advice click here.
